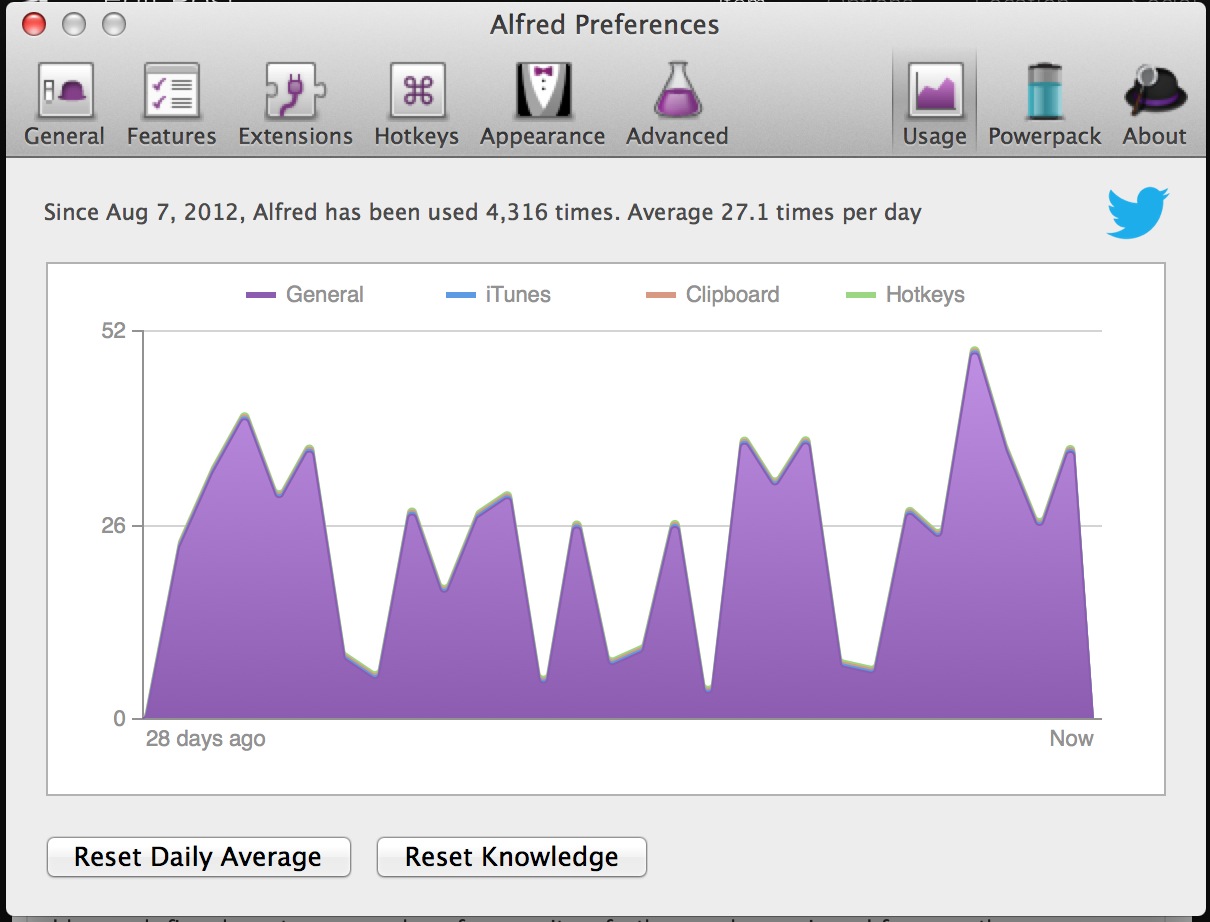

This is the third post in a three-part series examining physical UIs. The first two posts focused on the UIs that dominate the PC environment: the first covered the keyboard, detailing the ins and outs of Alfred, and the second covered the trackpad, illustrating the extensibility of BetterTouchTool (BTT).

In the BTT post, I asserted that BTT is the end-all-be-all of trackpad-based UIs, given the hardware constraints of modern trackpads. In short, BTT lets you map any gesture the trackpad hardware can recognize to any software function. In input-output terms, BTT can map any human input the trackpad can recognize to any desired software output. Nothing more can be done on this front.

However, in the Alfred post, I concluded by stating that Alfred is not the end-all-be-all of "command line" user interfaces. There is still significant room for improvement in command line UIs. This post will explore what that end-all-be-all might look like. But first, let's review what command line UIs are, and look at how they've evolved over the past 30 years.

What is a command line UI?

The definition of a command line UI is quite broad. Command line UIs are not limited to traditional command lines; they can take many forms and shapes. A simple definition: if you can command the computer to do something in a character-driven format (i.e. via the keyboard), then it's a command line UI. That rules out the mouse and trackpad, since they don't use characters. More broadly, command line UIs also exclude reading brain signals, Kinect-like motion controls, or any other input that cannot be translated into unambiguous, human-readable characters. It's important to note that command line UIs aren't limited to the keyboard: any input the computer can translate into unambiguous characters can drive a command line UI.

A history of command line UIs

The first computers used the most low-level form of command line UI. You told the computer exactly what you wanted it to do: which memory addresses to put data into, which memory addresses to read data from, and how to manipulate that data. It doesn't get much lower-level than that. Of course, laymen could never use such an interface to get anything done; the requisite knowledge was beyond daunting. With time, computers evolved so that command lines could understand higher-level commands, such as reading and saving files and launching applications within a filesystem, rather than raw memory addresses. They abstracted away memory addresses and other low-level system functions so the user didn't have to worry about them. Even so, they were hardly friendly. Could you imagine teaching your grandmother to type "launch Windows.exe" to boot up her computer? That's exactly how I booted into Windows 95 on my first computer.

In 1984, Apple unveiled the Macintosh, with the promise to banish the command line and all of its unfriendliness and ugliness. And it did. Except that it didn't.

As graphical UIs (GUIs) grew through the 80s and into the 90s, the command line of yore was relegated to a simple OS-level file search. And it was a slow, ugly search hidden behind multiple UI layers in both Windows and Mac OS. Do you remember how many clicks it took to reach the search function in Windows XP? Three. That's horrific.

Then, Google happened. Google reignited the concept of using a single text box as the entry point to any content you could conceivably want to find on the Internet. There are 999,435,454,456,876,655,345,945,888,234,457,962,235,654 pages on the Internet, and Google realized that there's no way you could ever browse through that volume of data using a mouse. After all, the mouse is slow and inefficient. Luckily, the keyboard makes it extremely easy to sift through hordes of data. Here's a simple way to think about how the keyboard does that: let's say there are 37 keys on the keyboard, one for every letter, number, and space. Under this assumption, there are 37^10 ≈ 4.8 × 10^15 possible 10-character queries. You enter your desired character combination, and Google cross-references it against every publicly available document on the Internet to give you 10 blue links.
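To make the arithmetic concrete, here is a short Python sketch (the language is my choice; the post itself has no code). It assumes, as the paragraph does, a 37-symbol alphabet of 26 lowercase letters, 10 digits, and a space, and counts the strings of exactly ten characters: each position independently holds any of the 37 keys, so the count is 37 multiplied by itself ten times.

```python
# Count the distinct fixed-length queries a small keyboard alphabet can express.
# Assumption (from the text): 26 letters + 10 digits + a space = 37 keys.
import string

ALPHABET = string.ascii_lowercase + string.digits + " "
QUERY_LENGTH = 10


def count_queries(alphabet_size: int, length: int) -> int:
    """Each of `length` positions can independently hold any of `alphabet_size` keys."""
    return alphabet_size ** length


total = count_queries(len(ALPHABET), QUERY_LENGTH)
print(len(ALPHABET))   # 37
print(total)           # 4808584372417849
print(f"{total:.1e}")  # 4.8e+15
```

Even under this toy assumption, a ten-character text box can express roughly 4.8 quadrillion distinct queries, which is why a few keystrokes narrow an enormous space so quickly.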

As Google grew in size and prominence, Microsoft grew jealous. In Windows Vista, Microsoft completely revamped search and brought it directly into the Start menu. And it was a pretty good search, spanning not only local files but also applications and system settings. Apple had already done something similar in Mac OS X 10.4 Tiger and called it Spotlight. However, both Microsoft and Apple failed to plug their native OS search engines into web search engines.

As Google grew through the 2000s, so did its competition. Everyone wanted a piece of Google's search revenue, not just Microsoft and Yahoo. Thousands of smaller vertical search engines, such as Amazon, eBay, Zappos, Wikipedia, Yelp, Mapquest, MySpace, Facebook, ZocDoc and Quora knew they could search specific domains far better than the generic Google could. As each of these companies grew, they iterated and developed their searches. Some became quite good within their respective fields, but no matter how good they became, they all faced the same accessibility problem: you have to navigate to their websites before you can search them. What a pain!

In the mid 2000s, a developer recognized this growing accessibility problem and created Quicksilver to solve it. Quicksilver, like Alfred, made it very easy to invoke any web search engine from anywhere in OS X. Unfortunately, Quicksilver's developer stopped working on the app even though its users loved it. In Quicksilver's absence, Alfred emerged to take its place as the prominent third-party search utility for OS X, doing everything that Spotlight should have done.

And that brings us to the 2010s. What's next for the command line?

Search engine overload

Today, there are thousands of vertical search engines. Each is suited to its particular field. In many cases, these vertical search engines can do a better job than Google can within their respective areas of expertise. So why don't people stop using Google and use these other search engines that can deliver better results?

The beauty of Google is that it's incredibly easy to remember and to use. If you need to find something online, just Google it. Or you could remember Quora for questions, Wolfram Alpha for computations, Zappos for shoes, IMDB for movies, Amazon for random merchandise, Kayak for airplane tickets, CNN for the news, TechCrunch for tech news, Economist for economic opinion pieces, etc. Computer geeks and power users like me won't have a tough time remembering which engines to use for which purposes, but most people will. Google solves the accessibility and memorization problem, even if Google delivers inferior search results compared to an optimized domain-specific search engine. For that incredibly simple reason, Google wins while everyone else loses.

Unfortunately, Alfred doesn't solve the search-engine-memorization problem. It reduces the friction between you and the search engine that you'd like to use, but it still requires that you know which one you want to use, and that you specify that detail to Alfred every time you search. In this sense, Alfred isn't particularly intelligent. It can't plug into anything beyond an inherently limited set of pre-configured search engines.
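Here is a minimal Python sketch of the keyword-prefix model described above: the user types a keyword naming the engine, followed by the query. It's written in the spirit of Alfred's custom web searches, but it is not Alfred's implementation; the keywords, the `dispatch` function, and the URL templates are all illustrative assumptions.

```python
# Keyword-prefix search dispatch, in the spirit of Alfred's custom web searches.
# Assumption: the keywords and URL templates below are illustrative, not Alfred's config.
from urllib.parse import quote_plus

# The user has to remember every keyword in this table: the memorization problem.
SEARCH_ENGINES = {
    "imdb": "https://www.imdb.com/find?q={query}",
    "wiki": "https://en.wikipedia.org/w/index.php?search={query}",
    "amazon": "https://www.amazon.com/s?k={query}",
}
# Anything without a recognized keyword falls through to a generic engine.
DEFAULT_ENGINE = "https://www.google.com/search?q={query}"


def dispatch(command: str) -> str:
    """Turn 'keyword query words...' into a search URL."""
    command = command.strip()
    keyword, _, query = command.partition(" ")
    template = SEARCH_ENGINES.get(keyword.lower())
    if template is None or not query.strip():
        # Unknown keyword (or nothing after it): search the whole command generically.
        return DEFAULT_ENGINE.format(query=quote_plus(command))
    return template.format(query=quote_plus(query.strip()))


print(dispatch("imdb star wars"))     # https://www.imdb.com/find?q=star+wars
print(dispatch("best pizza nearby"))  # https://www.google.com/search?q=best+pizza+nearby
```

The routing table lives in the user's head as much as in the code: if you don't remember that `imdb` exists, your query silently falls through to Google. That is the memorization problem in miniature.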

The technology that solves the memorization problem is the future of command-line interfaces. And such technology is commercially available today, sort of. Google, Apple, and Microsoft all recognize this problem and the tremendous value they can create by solving it. But they're employing very different strategies to solve it. Let's start with Google, the command-line incumbent.

Google's solution to this problem is Google Now. Google Now lets you speak in natural human language; your speech is transcribed into text behind the scenes, which makes it a command line UI. If Google Now can make sense of your question, it attempts to answer it succinctly. This is a fundamental departure from the Google we've all come to know and love. The original Google didn't answer your questions; it sent you elsewhere for someone else to answer them. Google Now attempts to understand the meaning of your question and answer it directly, without returning 10 blue links. Of course, Google Now isn't that smart: you can stump it pretty easily, and when you do, it reverts to the old Google and gives you 10 blue links. But there's a second aspect to command line interfaces beyond looking up information, and that's "doing stuff," such as sending emails, creating reminders, and sharing content. Google Now only attempts to answer your questions. Unlike Alfred, Google Now doesn't "do" much. It can't send emails, it can't create reminders, and it can't add songs to playlists; but it's great at looking up flight information.

Apple's solution to the search engine overload problem is Siri. As far as the user is concerned, Siri tries to do the same thing as Google Now: it takes your query (your command) and tries to give you an answer, or to do what you tell it to do. Unlike Google Now, Siri is actually quite good at "doing stuff," like playing a song, sending something to a friend, or creating a reminder. However, for everything Siri does right with regard to "doing," it fails when it comes to searching. Apple hasn't indexed the entire Internet and built an enormous Knowledge Graph on top of it. Right now, Siri is very limited in the information it can look up. It relies on a variety of vertical knowledge bases, including Yelp for restaurants, OpenTable for reservations, Yahoo for sports, IMDB for movies, and Wolfram Alpha for computations. Ironically enough, when Siri knows that none of these vertical search engines is sufficient for the task at hand, it reverts to Google, which gives you 10 blue links. Siri is also limited in what it can do: today it only works with a select few services that Apple has integrated into iOS. However, I suspect that iOS 7 (and if not iOS 7, then iOS 8) will usher in Siri APIs so that developers can plug into Siri. When that happens, Siri will determine which app is best suited for a given command, then hand off the search or action parameters to that app so it can search or "do" using context-specific criteria. This has never been done, but it's the logical progression of Siri technology; the foundation is in place and it will happen. It's just a question of when, and how well it will work.

Make no mistake about it, Siri is Apple's Google competitor. Apple is smart enough to know that it can't beat Google head-on. It has watched Microsoft try to compete with Google head-on: Bing isn't as good at search, has never been close to profitable, and has no viable path to profitability given its current trajectory and strategy.

Apple knows that the only way to take down a giant like Google is to compete asymmetrically (see The Innovator's Dilemma and The Innovator's Solution by Clayton Christensen for more on asymmetric technological competition). Siri competes asymmetrically with Google by attempting to make Google irrelevant.

And last, and most certainly least, is Microsoft. Microsoft hasn't yet released a search- or action-driven product to compete with Google Now or Siri, though it has taken steps in Bing to give you the "answer" you're looking for instead of 10 blue links. However, Bing only tries to return answers for common searches, such as celebrities. I'm certain Microsoft is working on a consumer-facing product to compete with Siri and Google Now; it's just not out yet. I would guess that whatever Microsoft does, it will leverage Facebook's new Graph Search to better tailor the answer. Microsoft knows it's the underdog and latecomer relative to Google and Apple on this front, and it needs all the help and data it can get to compete. Given Microsoft's technological and financial relationship with Facebook, using Facebook's Graph Search seems even more likely.

All for one, or one for all?

Apple and Google are approaching the same problem, how to build the best command line user experience, from opposite directions. And it shows. Today, Siri excels where Google Now fails (doing stuff), and Siri fails where Google Now excels (looking stuff up). This makes sense given the historical strengths of each company.

As Google Now and Siri mature, they will add features where they're currently lacking. However, Apple and Google are going about this in radically different ways. Google is attempting to determine the answer to your question, and do whatever it is that you need it to do, all on its own. Apple sees itself as a middleman whose job is to determine which of its developers is best qualified to perform a given task, be it searching or doing stuff. Once Apple has made that determination, it will hand off the relevant parameters to that application so that it can deliver the results you want.
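To make the middleman model concrete, here is a hypothetical Python sketch of the routing step: each registered app scores how well it can handle a command, the router hands the command to the best-scoring app, and it falls back to a generic web search when no app is confident enough. `Handler`, `Router`, the 0.15 threshold, and the keyword-overlap scoring are all my own illustrative assumptions (real intent recognition would be far more sophisticated), and none of this is Apple's or Google's actual API.

```python
# Hypothetical sketch of a "middleman" command router. Handler, Router, the
# threshold, and keyword-overlap scoring are illustrative assumptions, not a real API.
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass
class Handler:
    name: str
    keywords: FrozenSet[str]      # words this app claims to understand
    handle: Callable[[str], str]  # what the app does with the command

    def score(self, command: str) -> float:
        """Crude confidence: fraction of the command's words this app recognizes."""
        words = set(command.lower().split())
        if not words:
            return 0.0
        return len(words & self.keywords) / len(words)


class Router:
    """Picks the best-suited app for a command, else falls back to web search."""

    def __init__(self, fallback: Callable[[str], str], threshold: float = 0.15):
        self.handlers: List[Handler] = []
        self.fallback = fallback
        self.threshold = threshold

    def register(self, handler: Handler) -> None:
        self.handlers.append(handler)

    def route(self, command: str) -> str:
        # Ask every registered app how confident it is; keep the most confident.
        best = max(self.handlers, key=lambda h: h.score(command), default=None)
        if best is None or best.score(command) < self.threshold:
            # No app is confident enough: revert to "10 blue links".
            return self.fallback(command)
        # Hand off the command (the "parameters") to the chosen app.
        return best.handle(command)


router = Router(fallback=lambda c: f"web search: {c}")
router.register(Handler("reminders", frozenset({"remind", "reminder"}),
                        lambda c: f"reminders: {c}"))
router.register(Handler("movies", frozenset({"movie", "movies", "showtimes"}),
                        lambda c: f"movies: {c}"))

print(router.route("remind me to call mom"))
print(router.route("showtimes for the new movie"))
print(router.route("who led the confederate army"))
```

Here "remind me to call mom" goes to the reminders app, the showtimes request goes to the movies app, and the Civil War question matches no app and falls back to web search. The design point worth noticing is that the router never answers anything itself; it only decides who should, which is the bet the middleman strategy makes.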

My first blog post examined this topic in much more depth, looking at the problem in the historical light of "monolith vs. an army of developers." Historically, the army of developers has won every war that I'm aware of. However, given how dominant Android is today, and how limited iOS is by physical distribution and price-point constraints, it's highly likely that both strategies will coexist for quite some time, even if one proves materially better than the other. When Steve Jobs declared "thermonuclear war" on Android in early 2010, he really just meant that Apple was buying Siri, with hopes that Siri could eventually destroy Google. Apple bought Siri in April 2010.

Siri and Google Now represent the end-all-be-all of command line UIs. There's literally no faster way for a human to generate the kind of nuanced query that a computer can parse into a desirable result than speaking in their native tongue. Sure, you can swipe your trackpad far more quickly than you can say "go to the next tab," but can you wave at your Kinect and have it search for the name of the Confederacy's top general during the American Civil War? Even technologies like Google Glass, if perfected, wouldn't provide a faster UI mechanism than speaking for complex queries (though Google Glass will certainly open up new UIs for certain types of queries, namely image-driven ones); in fact, in all likelihood, one of the headline features of Google Glass 1.0 will be seamlessly integrated Google Now. Google Glass will perpetuate Google Now, not replace it. There's nothing better than asking a question at any time and immediately hearing the answer spoken back by a computerized female voice…

Unless, of course, the computer can deliver the result you want before you explicitly tell it anything at all. Serendipity, anyone? To be continued…